“Eyevan” is a multi-disciplinary work created as part of my university dissertation, with the aim of investigating whether an interactive installation can be affective.

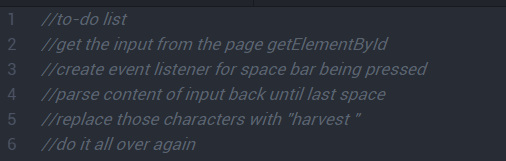

The installation uses Cycling ’74’s Max software for blob tracking, and a JavaScript library called p5.js to render the output.

Up to 3 users at a time wear headbands with infrared LEDs on them, and a camera with an IR pass filter installed internally produces an image that is trackable with Max. This tracking info is then sent to the JS running in the browser, using socket.io as a bridge.
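The browser side of that bridge could be sketched roughly as below. This is a minimal illustration rather than the project's actual code: the `'blobs'` event name, the flat `[x1, y1, x2, y2, ...]` message layout, and the helper names `parseBlobs` and `listenForBlobs` are all assumptions made for the example.

```javascript
// Hypothetical message layout: a flat list [x1, y1, x2, y2, ...] in camera pixels.
// The installation tracks up to 3 people, so extra blobs are ignored.
const MAX_PEOPLE = 3;

// Turn the flat list into up to MAX_PEOPLE positions normalised to the 0..1 range.
function parseBlobs(values, camWidth, camHeight) {
  const people = [];
  for (let i = 0; i + 1 < values.length && people.length < MAX_PEOPLE; i += 2) {
    people.push({ x: values[i] / camWidth, y: values[i + 1] / camHeight });
  }
  return people;
}

// Works with any object exposing on(eventName, handler), such as a socket.io client socket.
// 'blobs' is an assumed event name, not something defined by socket.io itself.
function listenForBlobs(socket, camWidth, camHeight, onPeople) {
  socket.on('blobs', (values) => onPeople(parseBlobs(values, camWidth, camHeight)));
}
```

In the browser this might be wired up as `listenForBlobs(io(), 640, 480, (people) => { /* update the eyes */ })`, where `640` and `480` are placeholder camera dimensions.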

A webapp runs in the browser from a local Node server, and this is where the p5.js library is used. Programmable shapes allow the eyes to be animated programmatically and respond to the people in the room. The eyes in this case have no inherent emotions of their own. Because the eyes have a low level of visual detail, it is the users themselves who apply the emotion to the eyes as they are viewing them. Therefore the installation is affective.
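To give a feel for how an eye built from simple shapes can follow someone, here is a small p5.js-style sketch. It is only an illustration under assumptions: the helper `pupilPosition`, the sizes, and the use of the mouse as a stand-in for a tracked person are all hypothetical, and the circular clamp is a simplification of how a pupil might be kept inside an eye.

```javascript
// Keep the pupil within maxTravel pixels of the eye centre while pointing at the target.
function pupilPosition(eyeX, eyeY, targetX, targetY, maxTravel) {
  const dx = targetX - eyeX;
  const dy = targetY - eyeY;
  const dist = Math.hypot(dx, dy);
  // Target is close enough: the pupil sits directly on it.
  if (dist <= maxTravel) return { x: targetX, y: targetY };
  // Otherwise move maxTravel pixels from the centre in the target's direction.
  const scale = maxTravel / dist;
  return { x: eyeX + dx * scale, y: eyeY + dy * scale };
}

// p5.js entry points; p5 calls these itself when the sketch runs in a browser.
function setup() {
  createCanvas(400, 300);
  noStroke();
}

function draw() {
  background(20);
  const eyeX = width / 2;
  const eyeY = height / 2;
  // The mouse stands in for a tracked person's position here.
  const pupil = pupilPosition(eyeX, eyeY, mouseX, mouseY, 20);
  fill(255);
  ellipse(eyeX, eyeY, 120, 80); // white of the eye
  fill(0);
  circle(pupil.x, pupil.y, 30); // pupil
}
```

Swapping the mouse position for a tracked person's coordinates, scaled to the canvas, would make the eye follow people in the room instead.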

As part of this project I compared 2D and 3D animating techniques for the eyes, since p5.js has 3D rendering capabilities utilizing WebGL. The comparison showed that 2D made more sense for the desired outcome and was easier to animate in the desired way.

I wanted to challenge myself with this project. At the beginning, when I was pitching the concept to the rest of my class, I had a sort of idea of how I would make each part and what software I’d use, but nothing concrete. I enjoyed learning to use p5.js and Max in new ways and was rewarded with a great feeling when everything eventually worked.

A part of this project that I didn’t imagine would be as big as it was is the computer vision element. I ended up removing the IR cut filter from an Xbox camera and replacing it with a piece of LEE 87 IR pass filter material to create a camera that could see the IR LEDs in the headbands mentioned before. See this other post for more on that.

Maybe at some point I’ll make the code available here or on GitHub, but I’d need to tidy everything first, especially the Max patch.

A mention needs to go to this GitHub repo, where I found the solution to getting data from Max into JavaScript in an open browser window.